Intel’s RealSense cameras are astonishingly precise but considerably less accurate. By optimising the calibration of the depth stream and correcting for non-linearity, the accuracy can be improved by an order of magnitude at 2.5 metres, and the remaining error becomes almost linear in the depth.

Sources of error

Calculating the coordinates of the 3D point corresponding to a depth reading is straightforward trigonometry – here’s a quick refresher – but the accuracy of the results depends on several factors.
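As a minimal sketch of that trigonometry, assuming an ideal pinhole model with no lens distortion, a depth pixel can be turned into a 3D point as follows. The function name and the intrinsics values (`fx`, `fy`, `cx`, `cy`) are illustrative and do not come from a real camera.

```python
def deproject(u, v, depth_mm, fx, fy, cx, cy):
    """Convert pixel (u, v) with a depth reading into a 3D point (mm).

    Assumes an ideal pinhole camera: fx, fy are focal lengths in pixels,
    (cx, cy) is the principal point, and there is no lens distortion.
    """
    x = (u - cx) / fx * depth_mm
    y = (v - cy) / fy * depth_mm
    return (x, y, depth_mm)


# Hypothetical intrinsics for a 640x480 depth stream.
point = deproject(u=420, v=240, depth_mm=1000.0,
                  fx=400.0, fy=400.0, cx=320.0, cy=240.0)
print(point)
```

Any error in the focal lengths or the principal point scales directly into the X and Y coordinates, which is why the factors below matter.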

Accuracy of the intrinsics

The supplied Intel® RealSense™ Depth Module D400 Series Custom Calibration program uses the traditional method, displaying a chequerboard to the camera in various poses and solving for the intrinsics. There are several issues with this methodology:

- This method establishes the intrinsics solely for the colour camera.

- Although it resolves to sub-pixel accuracy, it does so on a single frame, which is imprecise. The results of 3D calculations are extremely sensitive to errors in the field of view: a one-degree error in the vertical field of view translates into an error of more than 11mm at 1 metre. Concomitant errors in the horizontal field of view make matters worse, and they are quadratic in the depth.

- The depth stream is synthesised by the stereo depth module and the vision processor. Imperfections anywhere in the chain (unforeseen distortion, varying refraction at different wavelengths, heuristics in the algorithms, depth filtering) may negatively affect the accuracy. One cannot assume that an apparently perfect colour image will produce ideal results in the depth map.
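The field-of-view sensitivity mentioned in the list above can be checked with a few lines of trigonometry. This is a rough sketch: the nominal vertical field of view of 58 degrees is an assumed value for illustration, so check the datasheet for your own camera and resolution.

```python
import math


def edge_offset_mm(fov_deg, depth_mm):
    """Distance from the optical axis to the edge of the view at a given depth."""
    return depth_mm * math.tan(math.radians(fov_deg / 2.0))


# Assumed nominal vertical field of view (illustrative value).
nominal_fov = 58.0
depth = 1000.0  # 1 metre, in mm

# Position error at the edge of the image if the true FOV is one degree larger.
error_mm = edge_offset_mm(nominal_fov + 1.0, depth) - edge_offset_mm(nominal_fov, depth)
print(f"{error_mm:.1f} mm")
```

Under these assumptions the error at the edge of the image comes out at a little over 11mm, consistent with the figure quoted above.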

Non-linearity

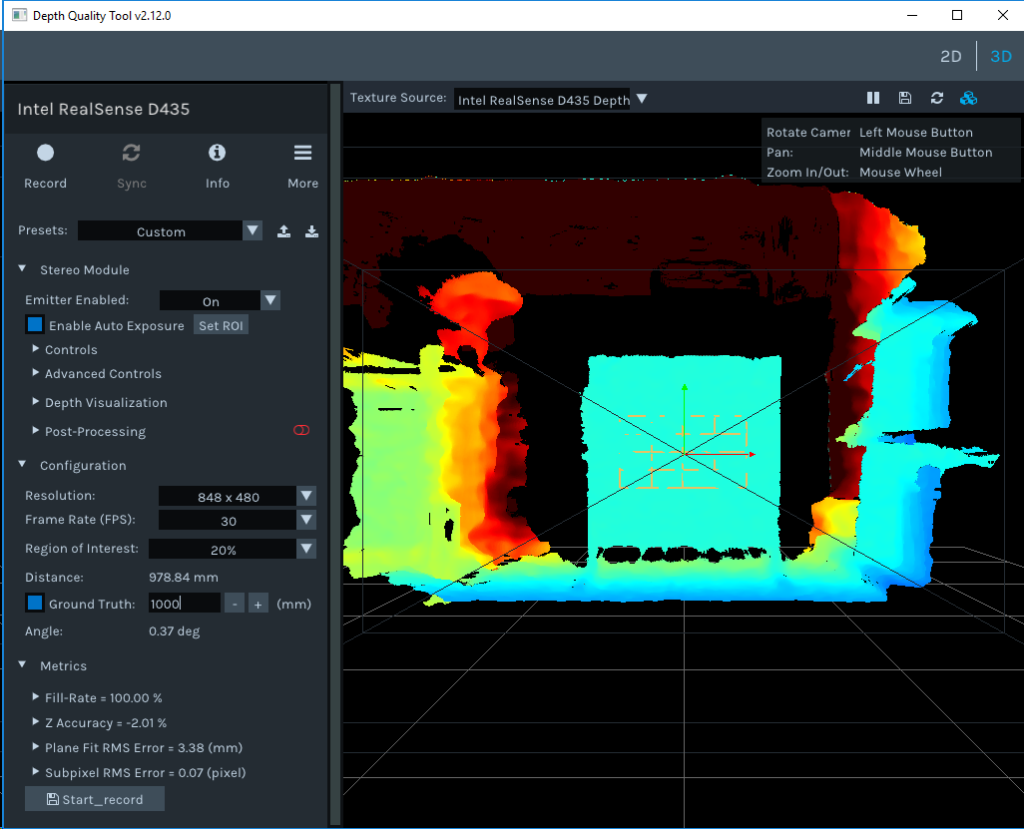

This is readily observed with the supplied DepthQuality tool. When viewing a target at a measured distance of 1’000mm from the glass, the instantaneous reported depth is out by ~22mm:

By averaging depth measurements over a period, errors in precision can be eliminated. Averaged over 1’000’000 measurements, my out-of-the-box D435 reports a range of 980.70mm – an error of 19.3mm. This is within the specified accuracy of 2% (20mm at 1 metre), but the error increases quadratically with depth, as is to be expected. Fortunately, this non-linearity appears to be constant for a given camera and, once determined, can be eliminated.
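Averaging over a million readings does not require storing them all. One way to do it, sketched here with Welford's streaming algorithm, keeps only a running mean and variance. The class name and the sample readings are made up for illustration, and this is not necessarily how the calibration program itself accumulates its averages.

```python
import math


class RunningStats:
    """Streaming mean and standard deviation (Welford's algorithm)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def add(self, value):
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (value - self.mean)

    @property
    def stdev(self):
        """Sample standard deviation; 0.0 until there are two samples."""
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0


# Illustrative readings in mm, not real camera data.
stats = RunningStats()
for reading in (979.0, 981.5, 980.0, 982.0, 981.0):
    stats.add(reading)

print(stats.n, stats.mean, stats.stdev)
```

For independent noise, the uncertainty of the mean shrinks with the square root of the number of samples, so averaging removes the random scatter but leaves any systematic offset untouched.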

Focal Point

The focal point of the depth map is supplied in the Intel RealSense D400 Series Datasheet; for a D435 it is defined as being 3.2mm behind the glass. Presumably due to the manufacturing tolerances of ±3%, the focal point may in reality be tens of mm away.

Mounting

No matter how precisely the camera is mounted, there will be errors between the mounting and the camera’s true central axis. Knowing them improves the accuracy when translating from the camera frame to the parent (vehicle or world) frame.
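To see why small mounting errors matter, here is a minimal sketch of the camera-to-parent transform as a rotation followed by a translation. The rotation order (about x, then y, then z), the function names and the numbers are assumptions chosen for illustration; your vehicle or world frame may use a different convention.

```python
import math


def rotation_matrix(rx_deg, ry_deg, rz_deg):
    """Rotation R = Rz * Ry * Rx (rotate about x first, then y, then z)."""
    rx, ry, rz = (math.radians(a) for a in (rx_deg, ry_deg, rz_deg))
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def camera_to_parent(point, rotation, translation):
    """Map a point from the camera frame into the parent frame: p' = R p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical mounting: 1 degree of rotation about the y axis,
# camera origin offset 50 mm along the parent x axis.
R = rotation_matrix(0.0, 1.0, 0.0)
p = camera_to_parent((0.0, 0.0, 1000.0), R, (50.0, 0.0, 0.0))
print(p)
```

In this example a single degree of unnoticed tilt shifts a point 1 metre away by roughly 17mm sideways, which is comparable to the depth error discussed earlier.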

Solution

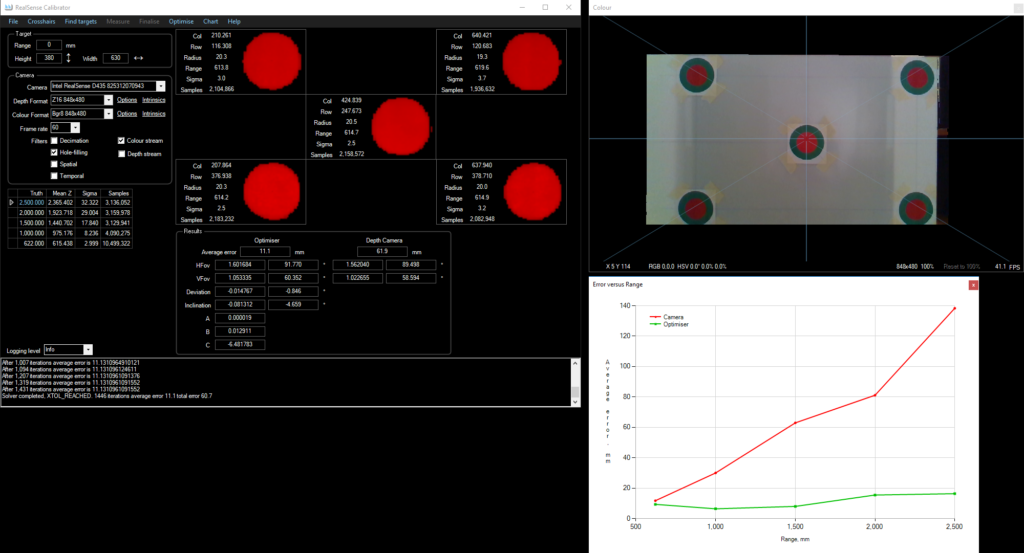

I have written a program that calibrates a camera based solely on measurements in the depth stream. It derives all the parameters discussed above by making several observations of a target with known dimensions. A much higher degree of accuracy is obtained by averaging over a large number of measurements. The optimal parameter values are then calculated, as a single problem, with a non-linear solver.
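The full non-linear solve is beyond a short example, but the idea of fitting a parameter to averaged observations can be shown for a single term. The sketch below is not the program's solver. It assumes a simplified model `truth = z + k * z**2` with one hypothetical coefficient `k`, which has a closed-form least-squares solution, and it uses synthetic data generated from that same model.

```python
def fit_quadratic_correction(measured, truth):
    """Least-squares estimate of k in the assumed model truth = z + k * z**2."""
    num = sum(z * z * (t - z) for z, t in zip(measured, truth))
    den = sum(z ** 4 for z in measured)
    return num / den


def correct(z, k):
    """Apply the fitted correction to a depth reading (mm)."""
    return z + k * z * z


# Synthetic data generated from the model itself, with k = 2e-5 per mm.
true_k = 2e-5
measured = [500.0, 1000.0, 1500.0, 2000.0]
truth = [z + true_k * z * z for z in measured]

k = fit_quadratic_correction(measured, truth)
print(k, correct(1000.0, k))
```

Solving for all parameters together, as the program does, avoids the situation where an error in one parameter (say, the focal point) is silently absorbed by another.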

It is open-source, available on GitHub https://github.com/smirkingman/RealSense-Calibrator

Screenshot of an optimiser output:

Discussion

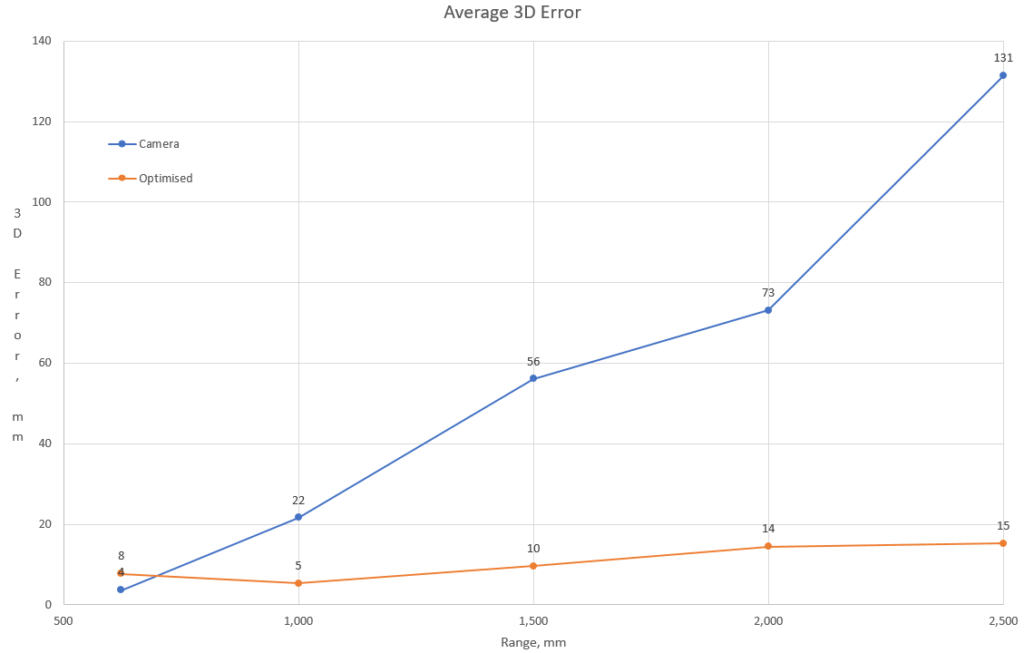

The comparisons presented above use the Z-range as the metric, as this is the metric in the reference documentation. The Z measure alone is only part of the answer; a more realistic metric is the 3D error of the point: the vector between the truth and the 3D point determined by the camera and software. Furthermore, just supplying a number doesn’t tell the whole story. Traditional error analysis supplies descriptive statistics, which give a value together with a confidence. The 68–95–99.7 rule then allows us to make statements like “The error will be no more than Xmm 99.7% of the time” (3-sigma, or 3σ).

The 3D error – the length of the vector between the true coordinates of the point and what the camera+software reported – is:

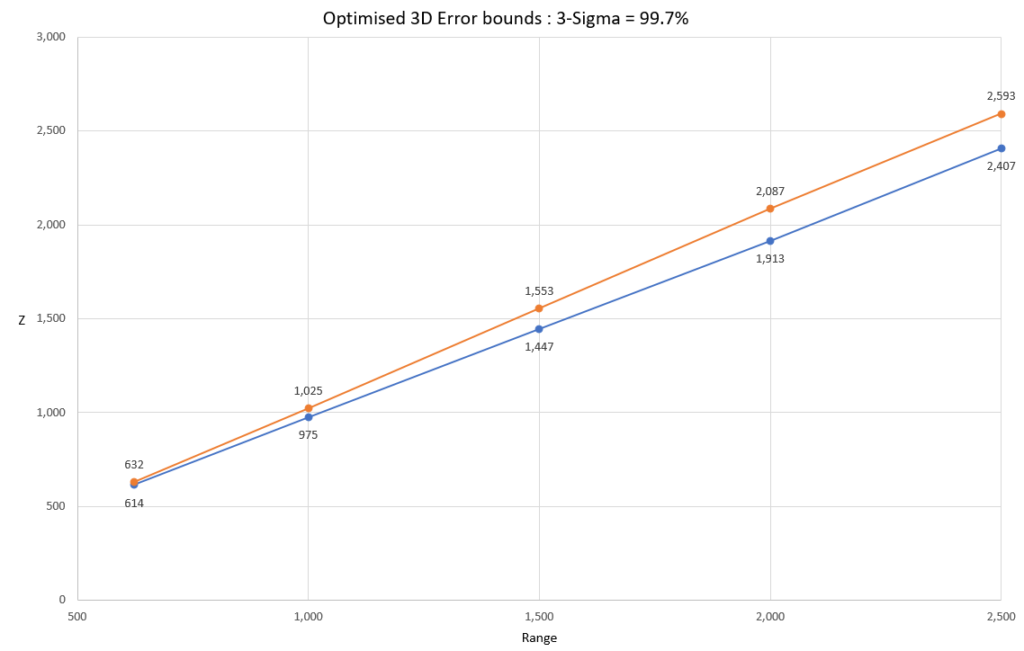

The 3-sigma error is:

What this shows is that at 1.5 metres, the coordinates of the 3D point will be between 1’447mm and 1’553mm from the camera 99.7% of the time.
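For readers who want to compute the same kind of figure for their own data, here is a minimal sketch. It assumes the 3-sigma bound is taken as the mean error plus three sample standard deviations, and that the errors are roughly normally distributed; the exact definition used for the charts above may differ. The sample points are made up, and `math.dist` needs Python 3.8 or later.

```python
import math
import statistics


def error_3d(truth, measured):
    """Length of the vector between the true and the measured 3D point."""
    return math.dist(truth, measured)


def three_sigma_bound(errors):
    """Mean error plus three sample standard deviations."""
    return statistics.mean(errors) + 3.0 * statistics.stdev(errors)


# Made-up sample points in mm, for illustration only.
truth = (0.0, 0.0, 1500.0)
samples = [(3.0, 4.0, 1500.0), (0.0, 0.0, 1510.0), (6.0, 8.0, 1500.0)]
errors = [error_3d(truth, s) for s in samples]
print(errors, three_sigma_bound(errors))
```

Note that the first and third samples have a perfect Z reading yet a non-zero 3D error, which is exactly why the Z-range alone understates the problem.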

Fixed SDK bug that returns more cameras than are connected.

With one camera connected, context.devices returns 2 devices, one with only a depth camera and one with both depth and colour sensors.

Changed startup so that cameras with only one sensor are ignored (with a warning).

Thanks for your response! I tried the debug version of the executable and it fires up just fine while the release exe still produces the old SNAFU window of error.

However, in the window that fires up from the debug exe, I notice that the info window says “No depth sensor” and “Two cameras detected” (Screenshot at: https://i.imgur.com/KGXJR2o.jpg), unlike the “1 camera detected” shown in the screenshots you have posted in your tutorial.

I cannot be around the target that I had created right now, so I am unable to test further, but I hope this gives you more insight into what might be going on. Please let me know how I may be of better help in this regard.

Cheers!

I’ve compiled the Debug version, which should give a more detailed error message.

https://github.com/smirkingman/RealSense-Calibrator/tree/master/bin/x64/Debug

Could you try that and let me have the results?

Thanks for this promising post. Dissatisfied with the results from my D415 even after calibrating using the default calibration application, I am looking at your tool with great expectation.

However, I have hit a roadblock. While the viewer and other applications, including my Python code, detect the sensor easily, your calibration application throws an error. I have uploaded a screenshot of the error window to: https://i.imgur.com/VBdSNnc.jpg

I am trying to run the application on a laptop running 64-bit Windows 10. Please let me know if you need any further details.

Cheers!