A quick review of the D435 with pictures of real-life situations; I wish I’d had this when I was considering buying a D435.

Unboxing is a breeze. The D435 is small enough to hide in your hand, in a sleek aluminium case weighing in at 71.8g. It comes with a 1m USB3 cable and a cute, flimsy little plastic tripod. Amusingly, the 28.6g tripod isn’t up to the task of supporting the camera once the 41.2g cable is plugged in: it falls over. I’ve given it to my granddaughter for her Lego. The standard camera thread mounting underneath works perfectly for a real tripod; for an aligned mounting there are two M3 tapped holes on the back, 45mm apart, but they are only 2.5mm deep.

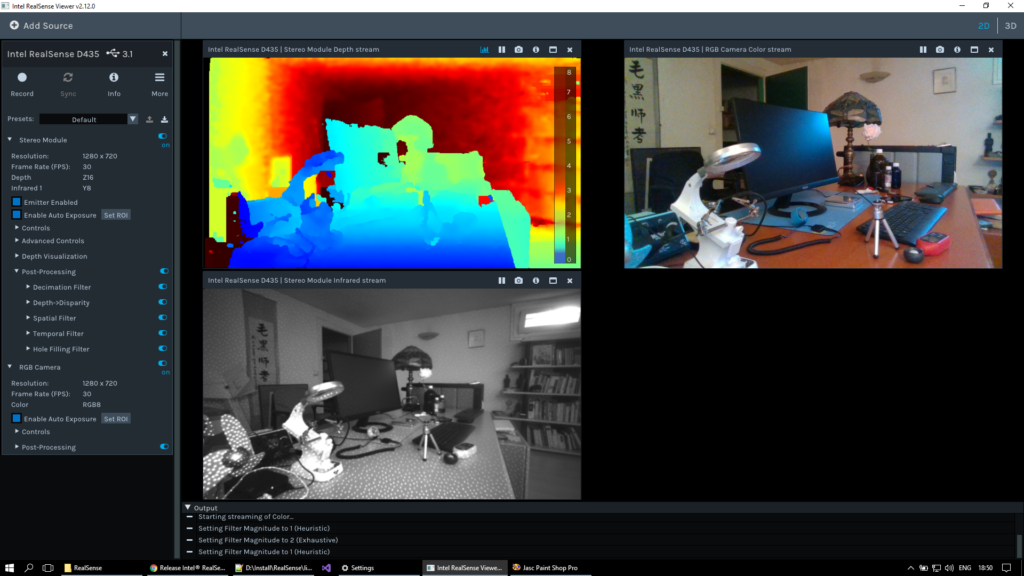

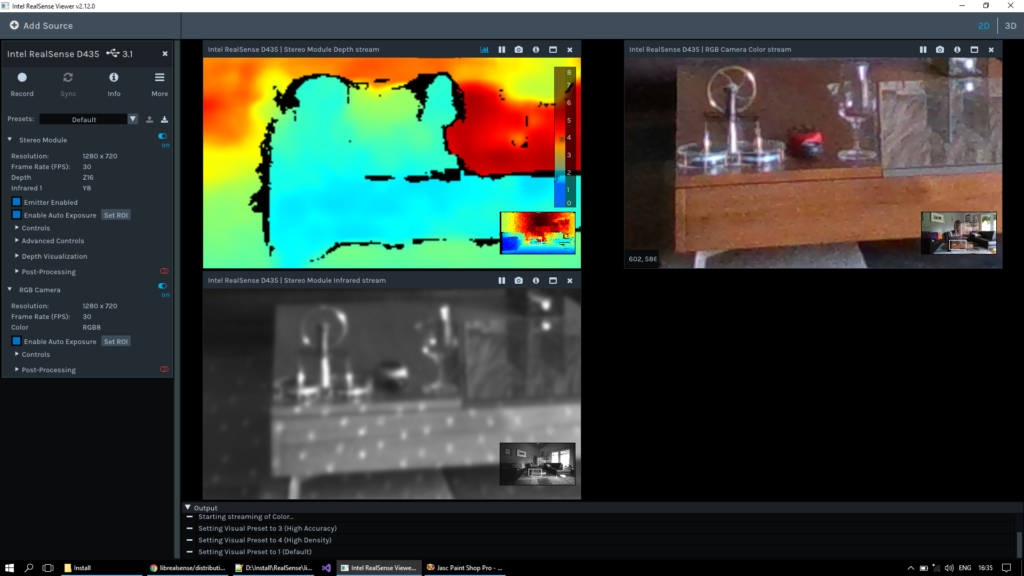

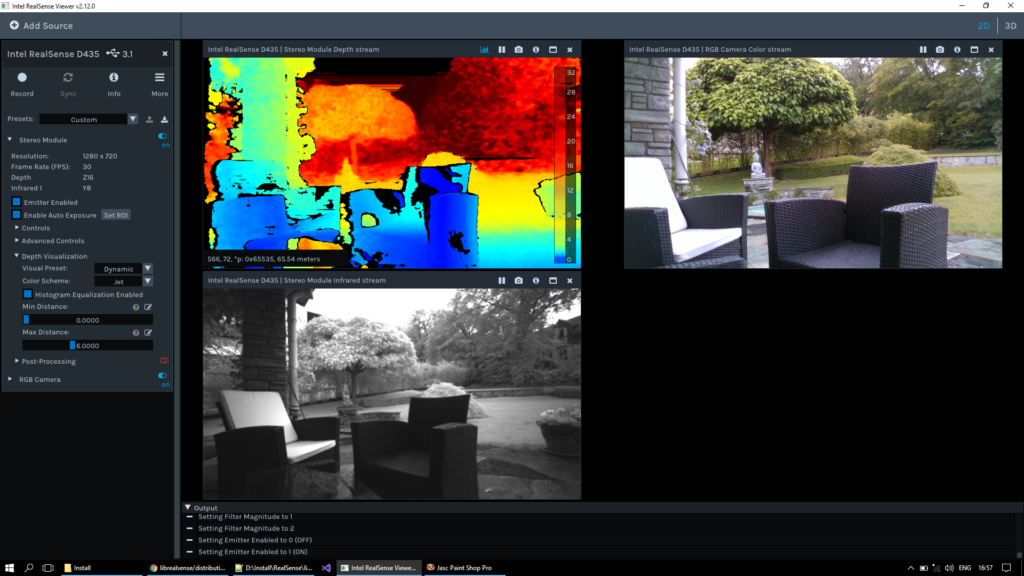

All the software is available on GitHub. A quick start with Intel.RealSense.Viewer.exe gives this depth view at 1280×720:

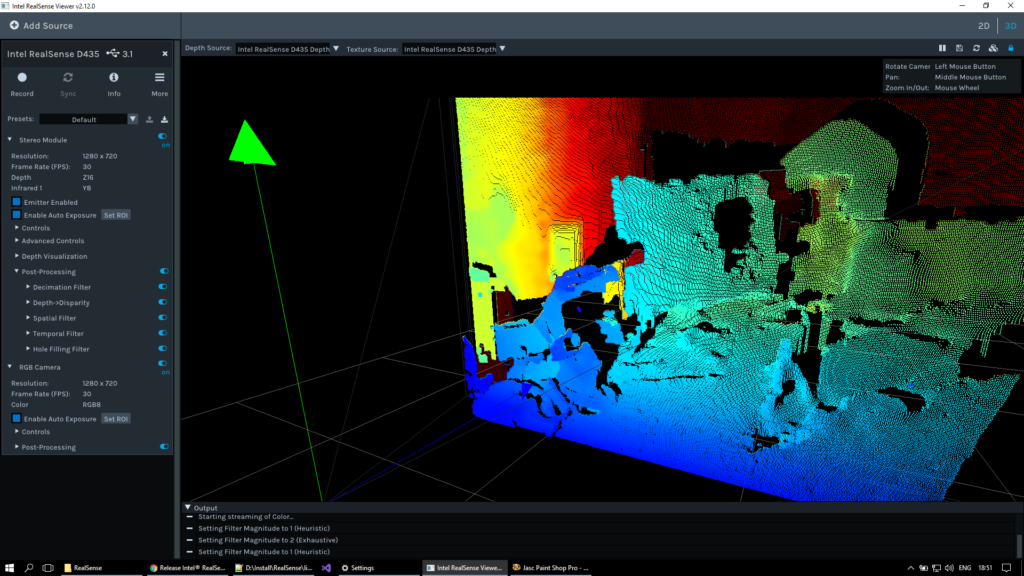

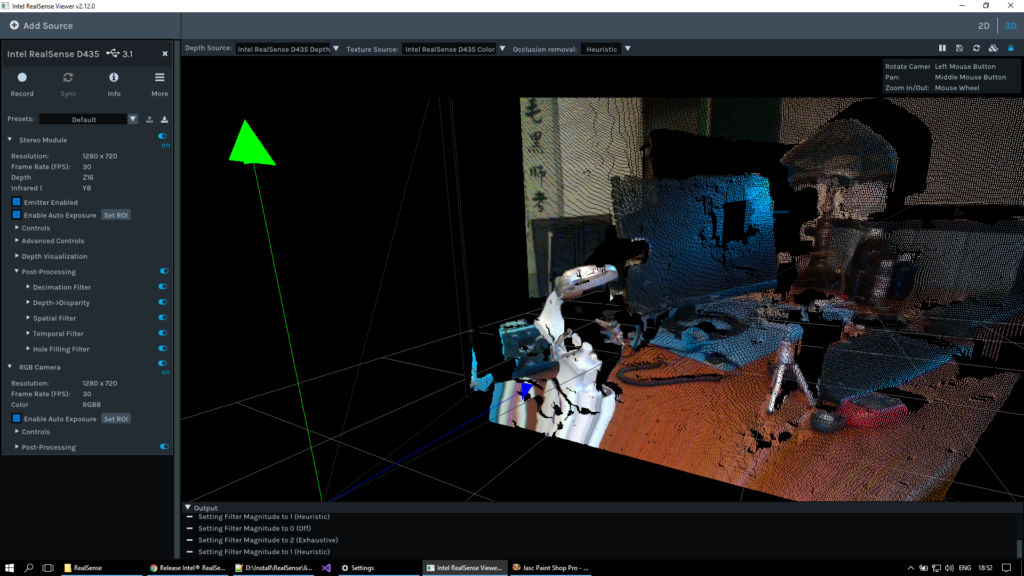

The toy tripod is in the foreground. Notice that I switched on the hole-filling filter, which is off by default. Switching to 3D with depth colours and quads:
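To give an idea of what a hole-filling step does, here is a minimal sketch in plain Python. It is not the SDK's implementation (the SDK ships its own hole-filling post-processing filter); it only illustrates one simple strategy, copying the nearest valid pixel from the left. The function name `fill_holes_from_left` and the sample values are made up for illustration; the only thing taken from the camera is the convention that a depth value of 0 means "no data".

```python
def fill_holes_from_left(depth_rows, invalid=0):
    """Return a copy of depth_rows in which each invalid pixel is replaced
    by the nearest valid pixel to its left on the same row.

    Pixels with no valid neighbour to their left stay invalid.
    """
    filled = []
    for row in depth_rows:
        out = []
        last_valid = invalid  # nothing valid seen yet on this row
        for value in row:
            if value != invalid:
                last_valid = value
            out.append(last_valid)
        filled.append(out)
    return filled


# Hypothetical 3x6 depth patch in millimetres; 0 marks pixels without depth.
patch = [
    [0, 812, 0, 0, 815, 820],
    [810, 0, 0, 818, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

filled_patch = fill_holes_from_left(patch)
for row in filled_patch:
    print(row)
```

The filled image looks cleaner, but the copied values are guesses rather than measurements, which is a reasonable argument for the filter being off by default.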

and using the camera colours:

The red light meter to the right of the tripod reads 28 lux: I was astonished at the depthmap accuracy in such poor light.

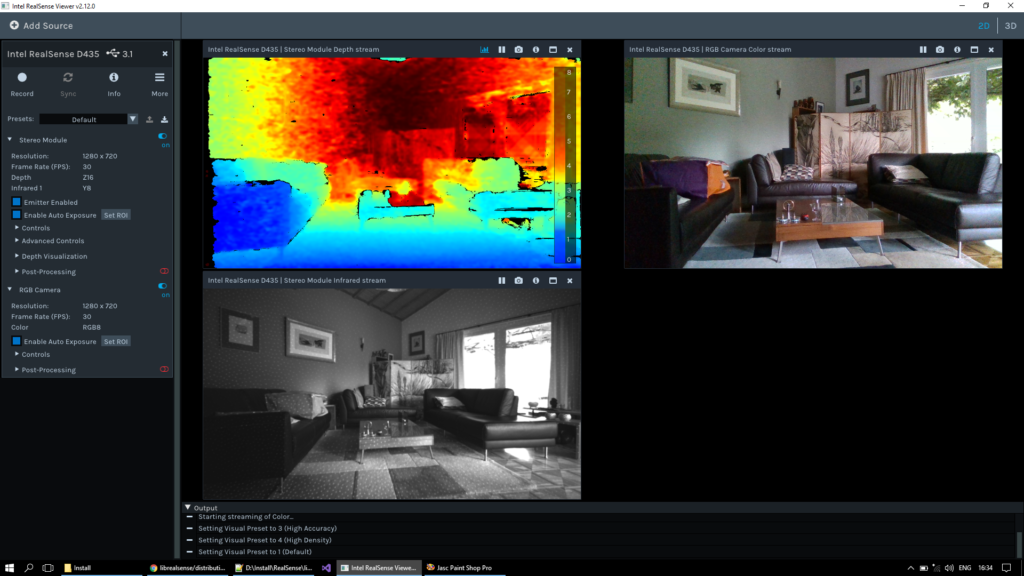

One of the major failings of depth cameras is reflections, so my next tests were with glass. Here is an interior, again with the light meter in the foreground, which reads 15 lux:

The right-hand side of the table is glass and thus appears further away, which is normal. The wine glass next to the light meter is captured perfectly. The model on the front-left of the table is a tiny Stirling engine. The Viewer has a nice zoom function:

The stereo matching doesn’t get the inner details of the wheel, but the outline is well delimited.

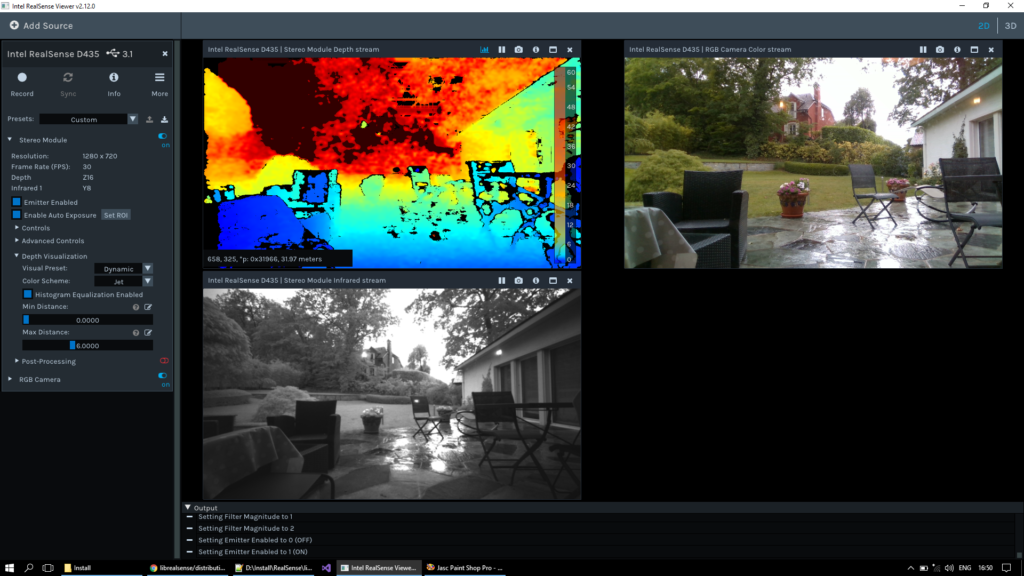

Next, looking outside from behind a window of 6mm + 15mm glass at 260 lux. My cursor was on the middle of the small hedge to the right of the wall; the 32 metres measured in the depth stream through 2 layers of glass seems quite reasonable:

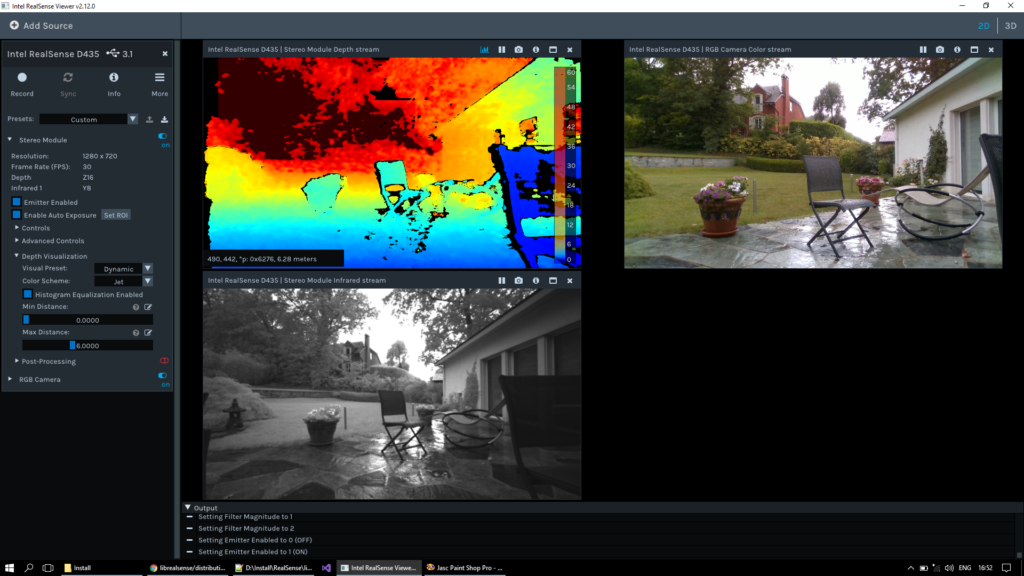

Stepping outside to 1,800 lux (and light rain), there are a few artefacts caused by the reflection in the water in front of the chair. The window on the right appears to be at the same distance as the trees, which makes perfect sense as that is what it reflects. Notice that the depth images cover a greater area than the colour images. This scene is extremely unfavourable for a depth camera, with poor light, reflections, textureless walls and rain:

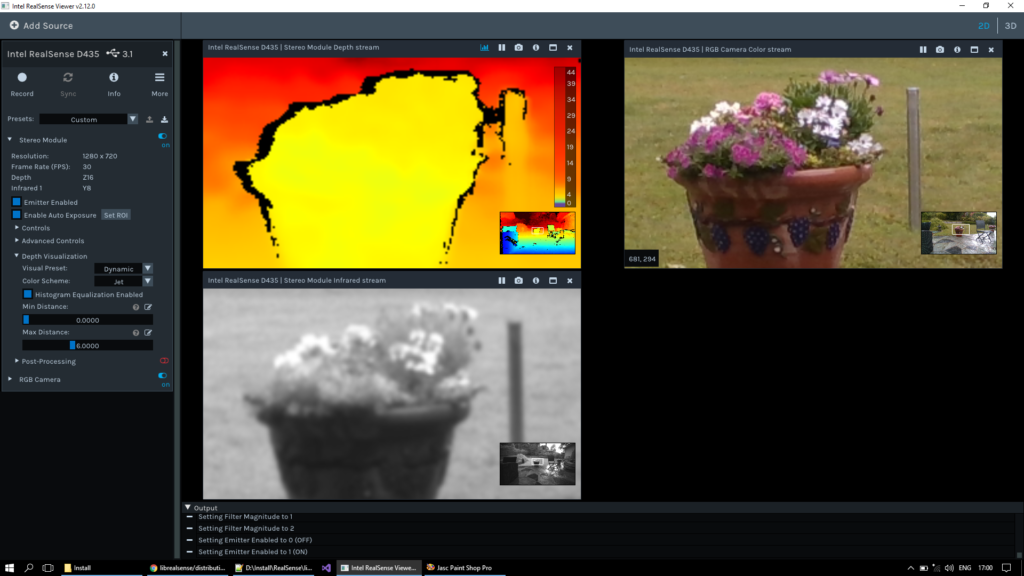

Zooming in on the flower pot in the foreground, it isn’t immediately clear if the flowers themselves are distinguished:

but a little image enhancement shows that they indeed are:

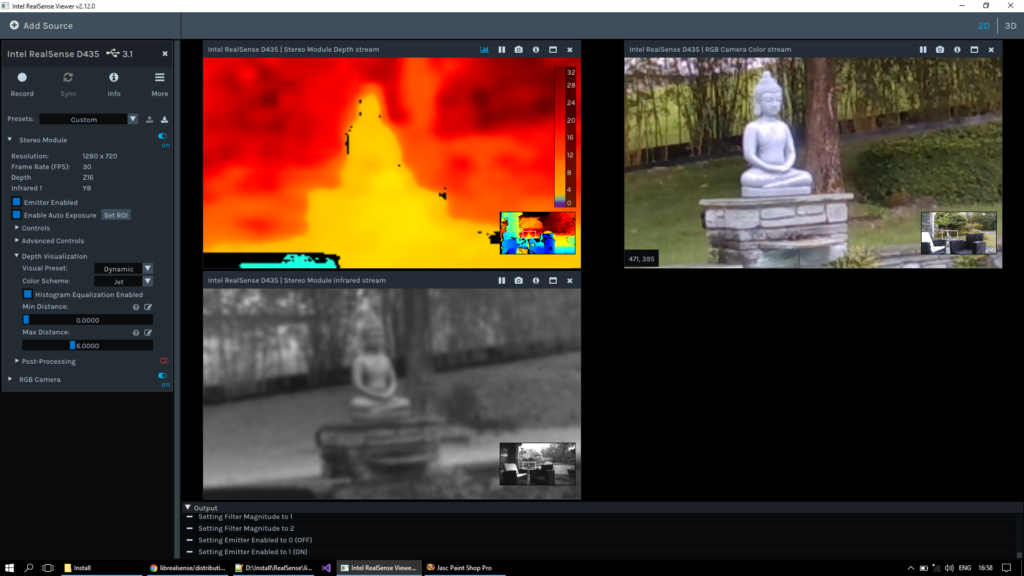

Panning left, there’s a buddha statue about 8 metres away. I positioned the cursor on the roof of the building above the Catalpa tree; the reading was 65.54 metres, which seems right (and is quite astonishing):
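A reading of 65.54 metres is worth a quick sanity check against the depth format. The depth frames are Z16, i.e. unsigned 16-bit values, so the largest raw value is 65535. The sketch below assumes a depth unit of 1 mm per raw step; that scale is configurable on the camera and is not stated in this article, so treat it as an assumption.

```python
Z16_MAX = 2**16 - 1      # largest raw value an unsigned 16-bit pixel can hold
DEPTH_UNIT_M = 0.001     # ASSUMPTION: 1 mm per raw unit (not stated in the article)


def raw_to_metres(raw, depth_unit_m=DEPTH_UNIT_M):
    """Convert a raw Z16 depth value to metres for a given depth unit."""
    if not 0 <= raw <= Z16_MAX:
        raise ValueError("raw depth must fit in an unsigned 16-bit value")
    return raw * depth_unit_m


max_range_m = raw_to_metres(Z16_MAX)

# The reading quoted in the text, as displayed to the centimetre.
reading_m = 65.54
# True when the reading is within half a centimetre of the format's ceiling.
is_at_ceiling = abs(reading_m - max_range_m) <= 0.005 + 1e-9

print(f"largest representable depth: {max_range_m:.3f} m")
print("reading is at the format's ceiling:", is_at_ceiling)
```

Under that assumption, 65.54 m coincides with the largest value the format can represent, so a reading like this may mean "at least this far" rather than a measured distance. Comparing it with a known distance would settle the question.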

The buddha is clearly rendered in the zoomed depthmap, with only a handful of dead pixels:

The camera button on the depthmap outputs the PNG, the RAW and the metadata:

Frame Info:

Type,Depth

Format,Z16

Frame Number,64305

Timestamp (ms),1528815686864.40

Resolution x,1280

Resolution y,720

Bytes per pixel,2

Intrinsic:,

Fx,639.315613

Fy,639.315613

PPx,637.479370

PPy,362.691040

Distorsion,Brown Conrady

which is a nice touch.
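The intrinsics in that metadata are enough to turn a depth pixel into a 3D point. The sketch below parses the key/value lines shown above and applies the plain pinhole model. It deliberately ignores the distortion model, and the field-of-view formula assumes the principal point sits at the image centre, which is only approximately true here. The function names, the example pixel and the 2 m depth are all made up for illustration.

```python
import math

# Key/value lines copied from the metadata above (numeric fields only).
METADATA = """\
Resolution x,1280
Resolution y,720
Fx,639.315613
Fy,639.315613
PPx,637.479370
PPy,362.691040
"""


def parse_metadata(text):
    """Parse 'key,value' lines into a dict of floats."""
    values = {}
    for line in text.splitlines():
        key, _, value = line.partition(",")
        if key and value:
            values[key.strip()] = float(value)
    return values


def deproject(u, v, depth_m, m):
    """Pinhole deprojection of pixel (u, v) at depth_m metres; no distortion."""
    x = (u - m["PPx"]) / m["Fx"] * depth_m
    y = (v - m["PPy"]) / m["Fy"] * depth_m
    return (x, y, depth_m)


def field_of_view_deg(size_px, focal_px):
    """Approximate field of view, assuming a centred principal point."""
    return math.degrees(2 * math.atan(size_px / (2 * focal_px)))


meta = parse_metadata(METADATA)
hfov = field_of_view_deg(meta["Resolution x"], meta["Fx"])
vfov = field_of_view_deg(meta["Resolution y"], meta["Fy"])
point = deproject(640, 360, 2.0, meta)  # hypothetical pixel and depth

print(f"approx. field of view: {hfov:.1f} x {vfov:.1f} degrees")
print("3D point in metres:", point)
```

Since Fx and Fy are equal, the pixels are square, and the principal point is within a few pixels of the image centre (640, 360).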

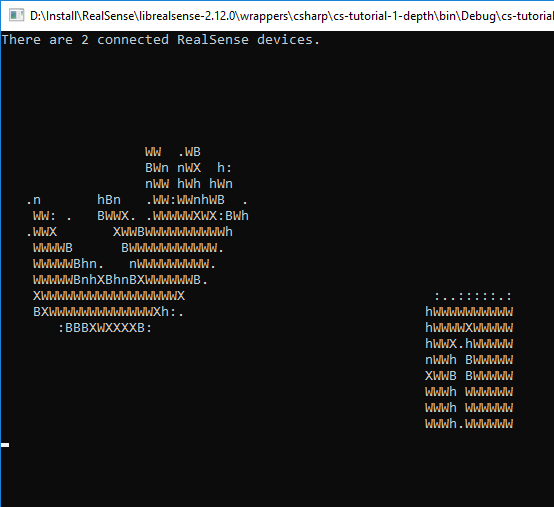

The SDK, librealsense-2.12.0, has the C++ sources for everything and wrappers for C#, Unity, OpenCV, PCL, Python, NodeJS and LabView. I tried the C# example SLN; after I added the reference to Intel.Realsense.DLL, the examples ran on first compile. The Depth tutorial generates a cute 1970s-style image made with characters (it’s my hand):

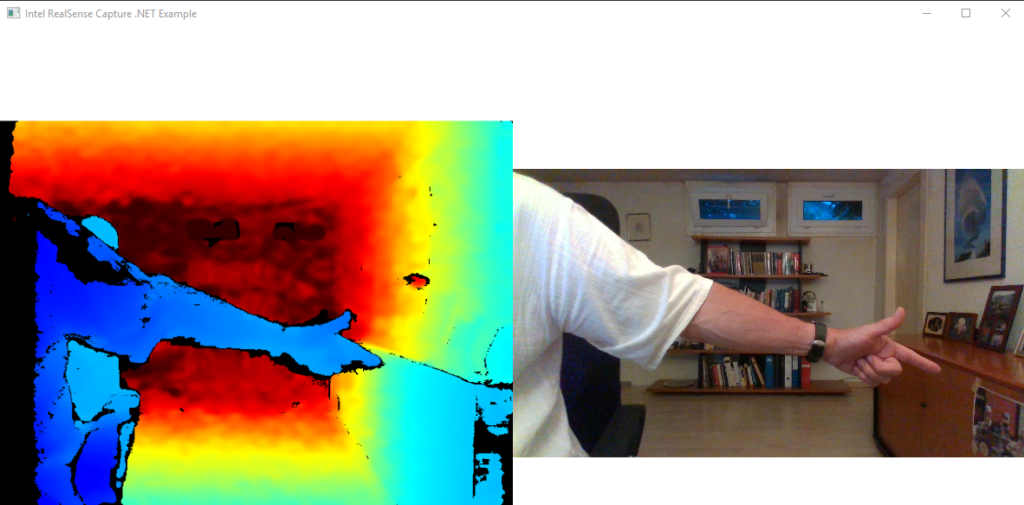
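For readers curious how a depth frame can end up as a picture made of characters, here is a minimal sketch. It is not the SDK tutorial's code: it simply maps each depth value to a character, with nearer pixels drawn denser. The character ramp, the function name `depth_to_ascii` and the sample values are assumptions made for illustration.

```python
RAMP = " .:-=+*#%@"  # hypothetical ramp, from sparse (far) to dense (near)


def depth_to_ascii(depth_rows, near_mm, far_mm, ramp=RAMP):
    """Render rows of depth values (mm) as text lines.

    Pixels with no depth (0) or outside [near_mm, far_mm] become spaces.
    """
    span = far_mm - near_mm
    lines = []
    for row in depth_rows:
        chars = []
        for d in row:
            if d == 0 or d < near_mm or d > far_mm:
                chars.append(" ")
            else:
                closeness = (far_mm - d) / span  # 1.0 at near_mm, 0.0 at far_mm
                index = min(int(closeness * len(ramp)), len(ramp) - 1)
                chars.append(ramp[index])
        lines.append("".join(chars))
    return lines


# Hypothetical 3x8 patch in millimetres: a near blob in front of a far wall.
patch = [
    [1500, 1500, 1000, 700, 700, 1000, 1500, 0],
    [1500, 1000, 700, 500, 500, 700, 1000, 1500],
    [1500, 1500, 1000, 700, 700, 1000, 1500, 0],
]

ascii_lines = depth_to_ascii(patch, near_mm=500, far_mm=1500)
for line in ascii_lines:
    print(line)
```

A real frame at 1280×720 would first be reduced to a grid of character cells, for example by averaging each block of pixels, before being rendered this way.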

The second example, about 100 lines of code, combines depth and colour:

Conclusion

Over the years I have studied many depth cameras, particularly for outdoor use: Kinect, Stereolabs, SwissRanger, PrimeSense, Bumblebee, to name but a few. None were satisfactory, either because they were blinded by sunlight or because they were cripplingly expensive. The D435 works perfectly both inside (with IR) and outside (with stereo matching); it is cheap, resilient and accurate. I think it is going to be a game-changer in computer vision.

Sorry, I don’t have a point cloud to hand.

Yes, and I was a little disappointed in the accuracy.

I’ve been working on improving the accuracy with an alternative method of calibration and I’ll be publishing the solution soon.

Great report! Can you share a point cloud file from a scan you did? Have you done an object and distance accuracy check, comparing the D435 measurements with hand measurements made with a tape?

Thank you. M